Clean text file of non numbers code

Text cleaning can be performed using simple Python code that eliminates stopwords, removes Unicode characters, and reduces complex words to their root form. The goal of data prep is to produce "clean text" that machines can analyze error-free. Clean text is human language rearranged into a format that machine models can understand.
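As a rough illustration of the three steps named above (stopword removal, stripping non-ASCII characters, and reducing words to a root form), here is a minimal Python sketch using only the standard library. The stopword set and the suffix list are tiny, hand-picked assumptions for demonstration; a real project would more likely rely on an NLP library's stopword list and a proper stemmer or lemmatizer.

```python
import re
import unicodedata
from typing import List

# Tiny illustrative stopword list (an assumption; real lists are much longer).
STOPWORDS = {"a", "an", "and", "the", "is", "are", "to", "of", "in", "that"}

# Suffixes for a toy stemmer (an assumption, not a real stemming algorithm).
SUFFIXES = ("ing", "ed", "es", "s")


def strip_non_ascii(text: str) -> str:
    """Decompose accented characters, then drop anything outside ASCII."""
    normalized = unicodedata.normalize("NFKD", text)
    return normalized.encode("ascii", "ignore").decode("ascii")


def naive_stem(word: str) -> str:
    """Remove one common suffix if at least three letters remain."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def clean_text(text: str) -> List[str]:
    """Lowercase, strip non-ASCII, tokenize, drop stopwords, and stem."""
    ascii_text = strip_non_ascii(text).lower()
    tokens = re.findall(r"[a-z]+", ascii_text)
    return [naive_stem(t) for t in tokens if t not in STOPWORDS]


print(clean_text("The café is serving cleaned tokens to the machines"))
# → ['cafe', 'serv', 'clean', 'token', 'machin']
```

The crude stems ("serv", "machin") show why suffix stripping is only an approximation of a word's root form.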

Gathering, sorting, and preparing data is the most important step in the data analysis process: bad data can have cumulative negative effects downstream if it is not corrected. Data preparation, also known as data wrangling, is the manipulation of data so that it is best suited to machine interpretation, and it is therefore critical to accurate analysis.

What Is Text Cleaning in Machine Learning?

Clean text file of non numbers free

Text cleaning is the process of preparing raw text for NLP (Natural Language Processing) so that machines can understand human language. While technology continues to advance, machine learning programs still speak human only as a second language, so communicating effectively with our AI counterparts is key to effective data analysis. This guide will underline text cleaning's importance and go through some basic Python programming tips.

Probably I will have to subtract my set of words from a dictionary of words.
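The idea of subtracting a set of words from a dictionary of words can be sketched with Python sets. This is only an illustration: `ENGLISH_WORDS` is a hypothetical stand-in for a real word list (for example, one loaded from a file with one word per line), and both function names are made up for this example.

```python
import re
from typing import List, Set

# Hypothetical stand-in for a real English word list (an assumption).
ENGLISH_WORDS: Set[str] = {
    "one", "man", "stands", "between", "us", "and",
    "annihilation", "oh", "hell", "no", "on",
}


def keep_dictionary_words(text: str, dictionary: Set[str]) -> List[str]:
    """Return the tokens of `text`, in order, that appear in `dictionary`."""
    tokens = re.findall(r"[a-z]+", text.lower())
    return [t for t in tokens if t in dictionary]


def non_dictionary_words(text: str, dictionary: Set[str]) -> Set[str]:
    """Return the distinct tokens of `text` that are NOT in `dictionary`."""
    tokens = set(re.findall(r"[a-z]+", text.lower()))
    return tokens - dictionary  # set difference does the "subtraction"


sample = "one man stands between us and annihilation oh hell no on rt lol"
print(" ".join(keep_dictionary_words(sample, ENGLISH_WORDS)))
print(sorted(non_dictionary_words(sample, ENGLISH_WORDS)))
```

The quality of the result depends entirely on the word list: proper nouns, slang, and inflected forms missing from it would be discarded along with the noise.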

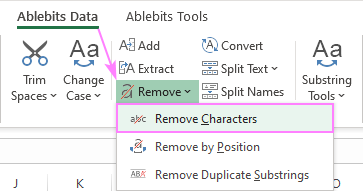

Clean text file of non numbers how to

I extracted tweets from Twitter using the twitteR package and saved them into a text file. I have carried out the following on the corpus (using `mc.cores=1` and `lazy=TRUE`, as otherwise R on Mac runs into errors):

    xx <- tm_map(xx, removeNumbers, lazy=TRUE, 'mc.cores=1')
    xx <- tm_map(xx, stripWhitespace, lazy=TRUE, 'mc.cores=1')
    xx <- tm_map(xx, removePunctuation, lazy=TRUE, 'mc.cores=1')
    xx <- tm_map(xx, strip_retweets, lazy=TRUE, 'mc.cores=1')
    xx <- tm_map(xx, removeWords, stopwords("english"), lazy=TRUE, 'mc.cores=1')
    tdm <- TermDocumentMatrix(xx)

But this term document matrix has a lot of strange symbols, meaningless words and the like. If a tweet is

    RT One man stands between us and annihilation: 3: OH HELL NO! - July 23 on Foxtel

then after cleaning the tweet I want only proper, complete English words to be left, i.e. a sentence or phrase void of everything else (user names, shortened words, URLs). Example:

    One man stands between us and annihilation oh hell no on

(Note: the transformation commands in the tm package are only able to remove stop words, punctuation, and whitespace, and to convert to lowercase.)

I have figured out part of the solution for removing retweets, references to screen names, hashtags, spaces, numbers, punctuation, and URLs.

    library(stringr)
    # Take out retweet header, there is only one
    clean_tweet <- str_replace(clean_tweet, "RT ", "")
    clean_tweet <- str_replace_all(clean_tweet, "#*", "")
    # Get rid of references to other screennames

Before doing any of the above, I collapsed the whole string into a single long character string. This cleaning process has worked quite well for me, as opposed to the tm_map transforms. All that I am left with now is a set of proper words and a very few improper words. Now I only have to figure out how to remove the non-proper English words.
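For readers following the Python side of this page, the tweet-cleaning steps described above (retweet header, screen names, hashtags, URLs, numbers, punctuation, extra spaces) could be sketched with the standard `re` module. This is an analogue written under assumptions, not a translation of the R code: the regular expressions are my own guesses at reasonable patterns, and the handles, hashtag, and URL in the sample tweet are hypothetical placeholders.

```python
import re


def clean_tweet(tweet: str) -> str:
    """Strip common Twitter noise and return lowercase, space-separated words."""
    text = re.sub(r"^RT\s+@\w+:\s*", "", tweet)   # retweet header (at most one)
    text = re.sub(r"https?://\S+", " ", text)     # URLs
    text = re.sub(r"@\w+", " ", text)             # references to screen names
    text = re.sub(r"#\w+", " ", text)             # hashtags
    text = re.sub(r"[^A-Za-z\s]", " ", text)      # digits, punctuation, symbols
    text = re.sub(r"\s+", " ", text).strip()      # collapse whitespace
    return text.lower()


# Hypothetical sample: the handles, hashtag, and URL are made up.
sample = (
    "RT @example_user: One man stands between us and annihilation: "
    "@another_user http://example.com/abc 3: OH HELL NO! - July 23 "
    "on Foxtel #showcase"
)
print(clean_tweet(sample))
# → one man stands between us and annihilation oh hell no july on foxtel
```

Pattern-based cleaning leaves words such as "july" and "foxtel" in place; deciding which remaining tokens count as proper English words requires a separate word-list check.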
